The quest for Trustworthy AI is high on both the political and the research agenda, and developing its foundations constitutes TAILOR’s first research objective (H1). This objective is concerned with designing and developing AI systems that incorporate the safeguards that make them trustworthy and respectful of human agency and expectations: not only the mechanisms for maximizing benefits, but also those for minimizing harm. TAILOR focuses on the technical research needed to achieve Trustworthy AI, while striving to establish a continuous interdisciplinary dialogue for investigating the methods and methodologies needed to fully realize it.

Learning, reasoning and optimization are the common mathematical and algorithmic foundations on which artificial intelligence and its applications rest. It is therefore surprising that they have so far been tackled mostly independently of one another, giving rise to quite different models studied in largely separate communities. AI focused on reasoning for a very long time and has contributed numerous effective techniques and formalisms for knowledge representation and inference. Recent breakthroughs in machine learning, and in particular in deep learning, have revolutionized AI and provide solutions to many hard problems in perception and beyond. However, this has also created the false impression that AI is just learning, not to say deep learning, and that data is all one needs to solve AI. This impression rests on the assumption that, provided with sufficient data, any complex model can be learned. The well-known drawbacks are that the required amounts of data are not always available, that only black-box models are learned, that these models provide little or no explanation, and that they do not lend themselves to complex reasoning. On the other hand, a lot of research in machine reasoning has given the impression that sufficient knowledge and fast inference suffice to solve the AI problem. The well-known drawbacks here are that knowledge is hard to represent and even harder to acquire, although the resulting models are more white-box and explainable. Today there is a growing awareness that learning, reasoning and optimization are all needed and need to be integrated. TAILOR’s second research objective (H2) is therefore to tightly integrate learning, reasoning and optimization. It will realize this by focusing on the integration of different paradigms and representations for AI.

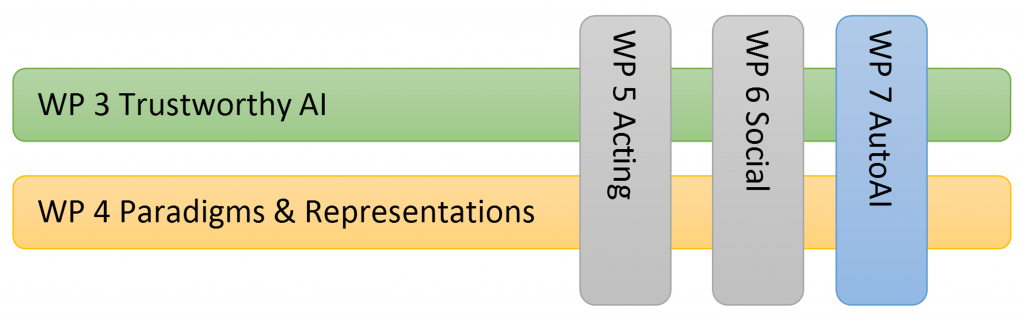

TAILOR’s two research objectives, H1 and H2, are tightly connected: achieving Trustworthy AI is not possible without an integrated approach to learning, reasoning and optimization, and, vice versa, AI frameworks that integrate these make it possible to address many of the requirements imposed by Trustworthy AI. TAILOR therefore takes a synergistic approach to addressing them.

TAILOR will pursue these two objectives in three more specific and more tangible contexts, each of which corresponds to an area in which Europe is at the forefront of research. Each context also involves automation or autonomy, which implies that Trustworthy AI is required: V1) using AI to act autonomously in an environment, V2) using AI agents to act and learn in a society, i.e., to communicate, collaborate and negotiate with, and to understand, other agents and humans, and V3) democratizing and automating AI systems, i.e., enabling people with limited expertise to build, deploy, and maintain high-quality AI systems.

This explains the structure of the research program. There are two horizontal work packages (WPs), which correspond to the two research objectives H1 and H2, and three vertical WPs, which correspond to the contexts V1, V2 and V3. All vertical WPs will address Trustworthy AI aspects as well as learning, reasoning and optimization.

Work packages

| WP | Title | Lead Partner | WP Leader |
|---|---|---|---|
| 1 | Management, Governance and Assessment | LiU | Fredrik Heintz |
| 2 | Strategic Research and Innovation Roadmap | INRIA | Marc Schoenauer |
| 3 | Trustworthy AI | CNR | Fosca Giannotti |
| 4 | Integrating AI Paradigms and Representations | KU Leuven | Luc De Raedt |
| 5 | Deciding and Learning How to Act | UNIROMA | Giuseppe De Giacomo |
| 6 | Learning and Reasoning in Social Contexts | IST | Ana Paiva |
| 7 | Automated AI | ULEI | Holger Hoos |
| 8 | Industry, Innovation and Transfer program | DFKI | Philipp Slusallek |
| 9 | Network collaboration | UNIBRISTOL | Peter Flach |
| 10 | Connectivity Fund | TU/e | Joaquin Vanschoren |
| 11 | Coordination with AI on Demand platform | UCC | Barry O’Sullivan |
| 12 | Dissemination and Outreach | UNIBO | Michela Milano |
| 13 | Ethics requirements | LiU | Fredrik Heintz |