Large Language Models (LLMs) and Foundation Models have taken the world, and even researchers, by surprise with their capabilities, notwithstanding their limitations. This has spurred worldwide controversy about the development, the potential and the risks of AI technologies in general.

There is no doubt that these technologies will play a significant role in shaping our future, given their wide impact on markets and on society. There are both optimistic and catastrophic visions of such a future, but the decisions that researchers and regulators take today will certainly have an impact on it.

At the European AI workshop on LLM/Foundation Models, held on 17-18 October 2023 in Amsterdam (https://sites.google.com/view/ellisfms2023/home?authuser=0), Prof. Giuseppe Attardi organized a debate on a number of questions that the community must answer in order to take those decisions wisely. As representatives of that community, the audience had an opportunity to express its position.

A poll was taken before the debate, and then the panellists argued for or against each issue.

This is the list of issues and the names of the debaters:

1. Should we continue advancing Foundation Models or suspend their development?

Moshe Vardi (Rice University)

2. Do LLMs understand language and can they be made reliable?

Geoff Hinton (Toronto) vs Gary Marcus (NYU)

3. Should we regulate AI applications or AI corporations?

Brando Benifei (co-rapporteur of the European AI Act) vs Jacopo Pantaleoni (former Nvidia Principal Scientist)

4. Are “Openly Licensed” models or efficient fine-tuning approaches sufficient to democratise AI?

5. Can models be controlled or guardrails incorporated in their training?

Yoshua Bengio (Univ. of Montreal)

The full video of the debate is available at: https://youtu.be/U5BmixXbW7A

Results

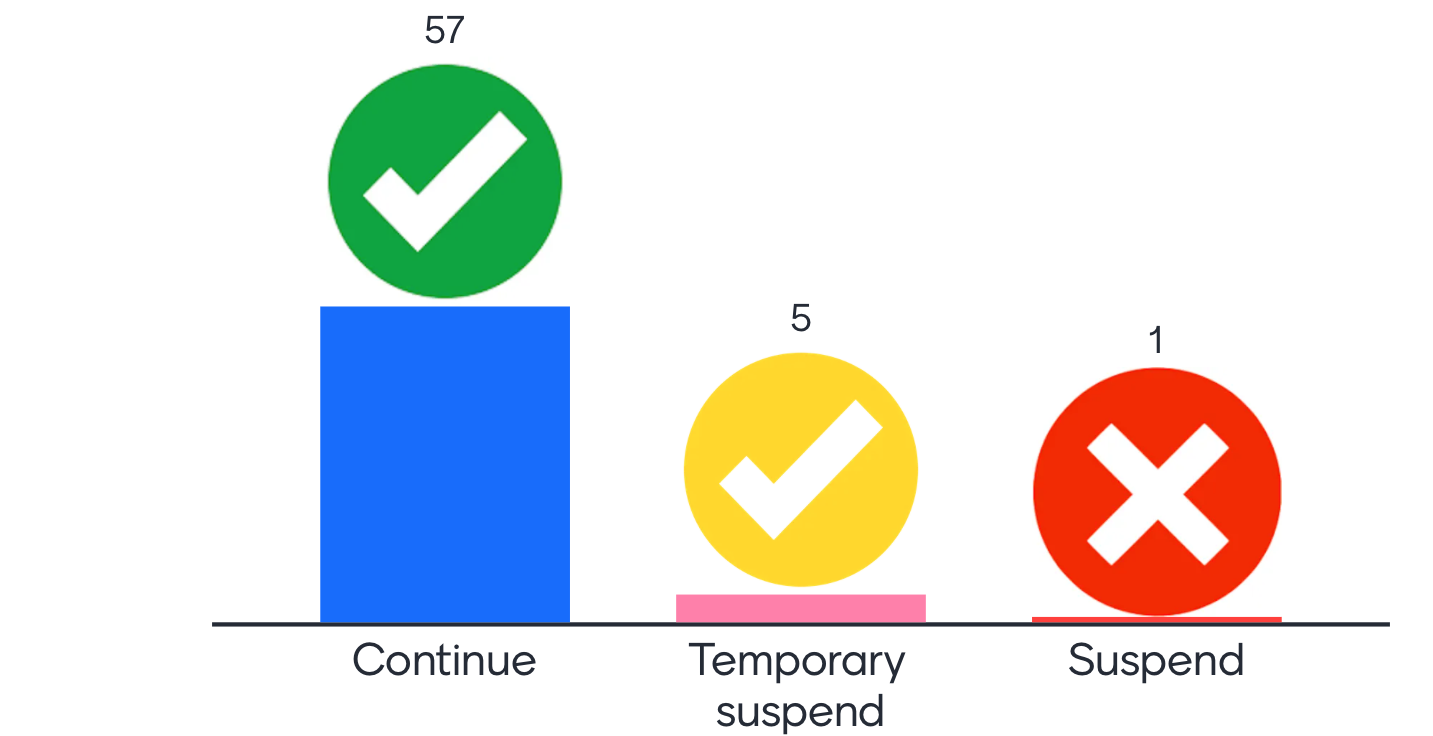

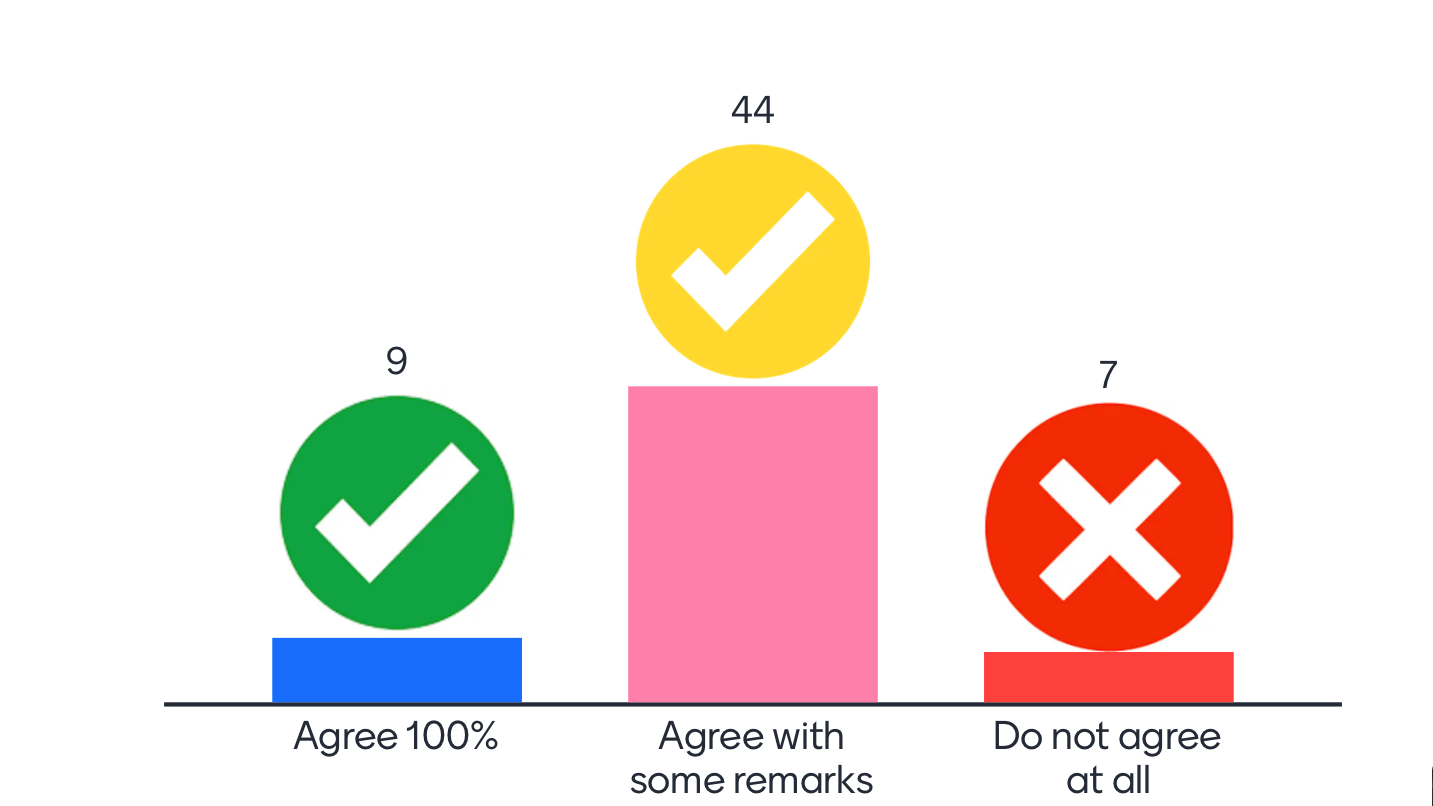

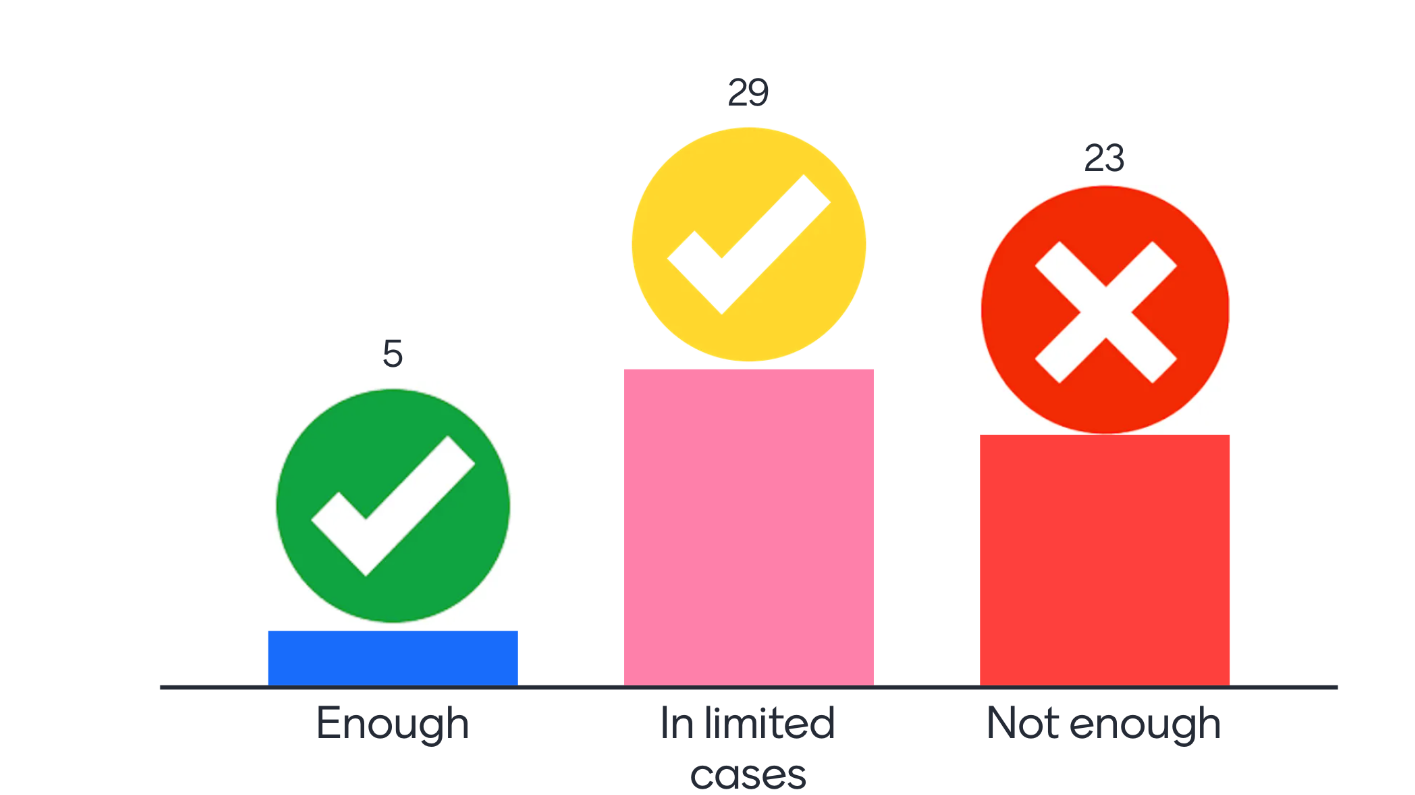

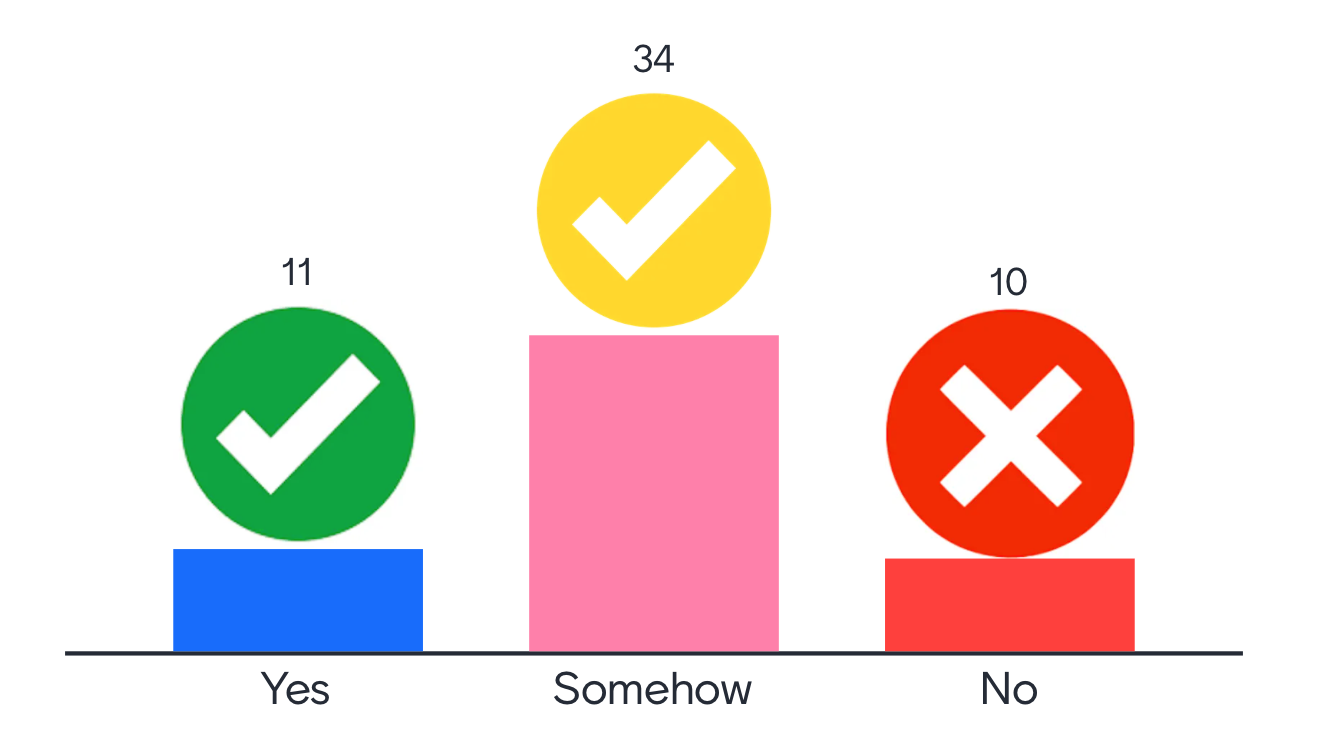

The results of the poll taken before the debate show that a large majority wishes to continue the development of LLMs, with some skepticism about the effectiveness of “Openly Licensed” models, while on the other issues opinion was evenly split, with the middle answer obtaining the most votes:

Should we continue advancing Foundation Models or suspend their development?

Do LLMs understand language and can they be made reliable?

Should we regulate AI or AI corporations?

Are “Openly Licensed” models or efficient fine-tuning approaches sufficient to democratise AI?

Can guardrails be incorporated within model training?

Credits: Article written by Prof. Giuseppe Attardi (University of Pisa)