Continual Self-Supervised Learning

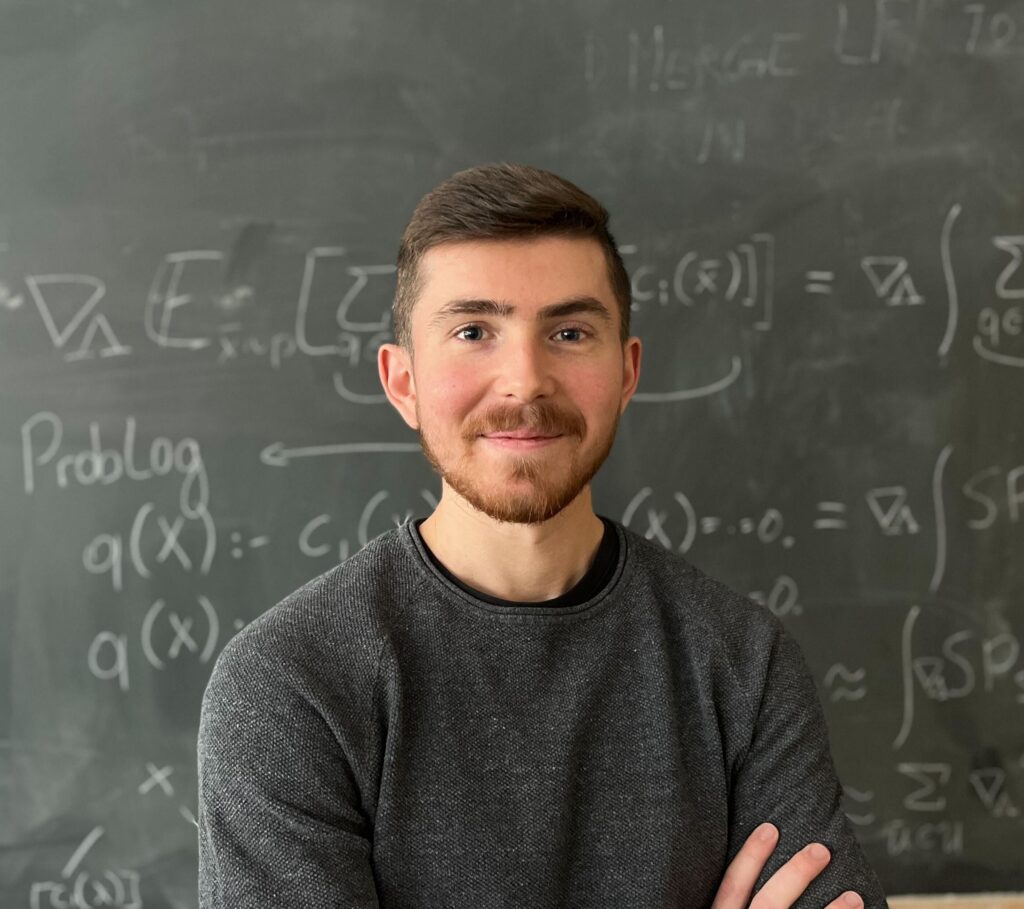

Giacomo Cignoni, Research Fellow at the University of Pisa

Learning continually from non-stationary data streams is a challenging research topic that has grown in popularity over the last few years. The ability to learn, adapt, and generalize continually and efficiently appears fundamental to a more sustainable development of Artificial Intelligence systems. However, research […]