Manfred Jaeger

Associate Professor at Aalborg University

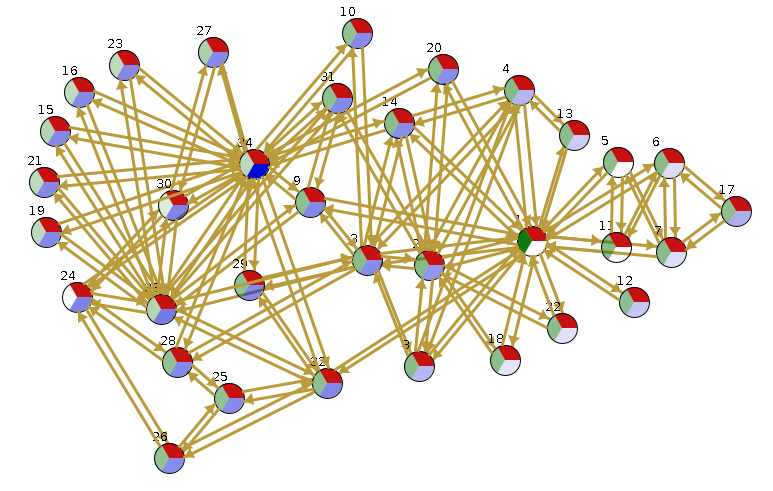

From social networks to bibliographic databases, many important real-world phenomena consist of networks of connected entities. The mathematical model of such a network is a graph, which in its basic form consists of a collection of nodes connected by edges. To capture the rich structure and information available in real networks, this basic graph model can be enriched with additional data, ranging from a single attribute such as ‘gender’ for a node in a social network to an associated video file for a camera that is part of a sensor network.

Research in artificial intelligence and machine learning has developed several ways to use rich and complex network data, both for analyzing network properties and for predicting unknown quantities. One line of research, known as “statistical relational learning”, has focused on logic-based, interpretable representations of probabilistic dependencies among network entities. The more recent approach of “graph neural networks”, on the other hand, uses complex numeric functions to build powerful prediction models.

The two approaches have complementary strengths and weaknesses. Statistical relational learning provides more interpretable models, allows models to be constructed by combining expert knowledge with automated machine learning, and supports a greater variety of analysis and prediction tasks. The neural network approach, by contrast, often yields models with excellent predictive capabilities and can more easily handle the large quantities of data generated by complex networks.

The goal of this research visit is to initiate a longer-term research program on integrating statistical relational and neural approaches for learning and reasoning with graph data, so that the interpretability and flexibility of the former can be combined with the numerical capabilities of the latter.